Smartphones have quickly evolved into the best point-and-shoot cameras ever made, but they might soon take another leap forward thanks to an innovative new Sony image sensor.

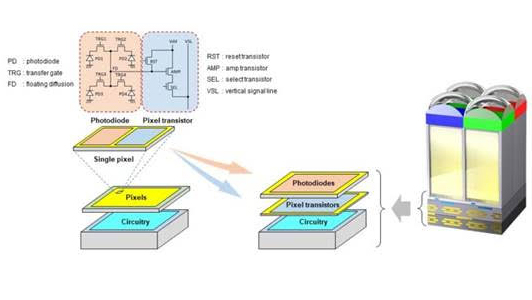

Sony's semiconductor division has just announced that it's made the world's first stacked CMOS sensor with two-layer transistor pixels. Conventional CMOS sensors have their photodiodes and pixel transistors on the same substrate (or layer), but Sony's new chip moves them onto two separate layers.

What does this mean for image quality? Sony says this new architecture roughly doubles each pixel's saturation signal level, meaning each photodiode can hold about twice as much charge (and so record about twice as much light) before it clips to pure white. This should significantly widen the sensor's dynamic range. Moving the transistors to their own layer also frees up enough room for larger amp transistors, which should help reduce noise in night-time shots.
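For readers who want the arithmetic: a pixel's dynamic range is commonly expressed as the ratio between its saturation (full-well) signal and its noise floor, quoted in decibels or photographic stops. The sketch below uses made-up electron counts, not any figures Sony has published, to show that doubling the saturation level while keeping the noise floor unchanged adds about 6 dB, or one stop. Any noise reduction from the larger amp transistors would widen the range further.

```python
import math


def dynamic_range(saturation_e: float, noise_floor_e: float) -> tuple[float, float]:
    """Return (decibels, stops) for a pixel, given its saturation signal and
    noise floor, both measured in electrons."""
    ratio = saturation_e / noise_floor_e
    return 20 * math.log10(ratio), math.log2(ratio)


# Hypothetical pixel figures, chosen only for illustration (not Sony specs)
NOISE_FLOOR_E = 2.0
baseline_db, baseline_stops = dynamic_range(6000, NOISE_FLOOR_E)
doubled_db, doubled_stops = dynamic_range(12000, NOISE_FLOOR_E)  # 2x saturation

print(f"baseline: {baseline_db:.1f} dB ({baseline_stops:.2f} stops)")
print(f"doubled:  {doubled_db:.1f} dB ({doubled_stops:.2f} stops)")
print(f"gain:     {doubled_db - baseline_db:.2f} dB ({doubled_stops - baseline_stops:.2f} stops)")
```

Because both measures are logarithmic, the gain from doubling saturation is the same whatever baseline numbers you plug in.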

The benefits should be particularly apparent in high-contrast scenes, like those with bright sunlight and dark shadows, which smartphones have long struggled with. Today's phones use clever multi-frame processing to improve their dynamic range, but this new Sony sensor should give their software a much better base to work from.

Sony hasn't yet said how close it is to mass-producing its new sensors, but did specify that its new two-layer transistor pixel tech would "contribute to the realization of increasingly high-quality imaging such as smartphone photographs".

This is significant because Sony is by far the biggest manufacturer of smartphone camera sensors. According to Statista figures, it has 42% of the global image sensor market, and recent teardowns of the iPhone 13 Pro Max show that it uses three Sony IMX 7-series sensors.

The new sensor could also be good news for mirrorless cameras, but the gains are likely to be most significant for smaller smartphone sensors – and that seems to be where Sony is focusing its attention to start with.

Analysis: A tech leap that's ideal for smartphones

The big problem that camera phones grapple with is getting enough light onto their sensors, without making the handset itself the size of a brick. Recently, some radical improvements in multi-frame processing have been the solution, but this new Sony sensor could be the first significant hardware leap we've seen for a while.

On the new Sony Xperia Pro-I, we saw Sony use a 1-inch sensor in one of its phones for the first time. But this also revealed the limits of the old-school approach of simply using larger sensors to gather more light. The Xperia Pro-I only uses a 12MP portion of that 20.1MP sensor, because a phone that used the whole 1-inch sensor would likely be prohibitively thick.

That's why this new stacked sensor is ideal for smartphones. It promises much better dynamic range and lower noise than current CMOS sensors, but without significantly increasing the size of the chip itself.

So-called 'stacked' sensors have made big leaps in mirrorless cameras, too. Sony was the pioneer here as well, with the Sony A9 becoming the first full-frame camera to have a stacked chip in 2017. In this case, the breakthrough of the stacked design was adding a layer of DRAM onto the sensor itself, which dramatically improved its read-out speeds.

This technology has powered the recent wave of flagship mirrorless cameras, which offer incredibly fast burst speeds and 8K video powers – with the Nikon Z9 even able to dispense with its mechanical shutter altogether, thanks to the improvements to electronic shutters that stacked sensors bring.

But the benefits of Sony's new two-layer transistor pixels are likely to be more in the realms of improved dynamic range and reduced noise for smartphones – and if this tech has as big an impact as Sony's mirrorless camera sensors, it could power another image quality leap for next-gen phones.


from TechRadar - All the latest technology news https://ift.tt/3yzTxSg